Fake articles, doctored videos, and X accounts used to deceive journalists: Operation “Matryoshka” is flooding social media. Accused of cyber interference, Russia is continuing its disinformation campaigns despite sanctions, against the backdrop of the war in Ukraine.

Disinformation as a Tool of Influence

In the digital age, disinformation has become a weapon capable of manipulating public opinion and even influencing political decisions. It is a deliberate strategy that injects false information into the online information flow, and it is part of what some call the “information war”. Russia often uses it to create confusion and destabilize other nations.

Russian Cyber Malfeasance

Since the Cold War, Russia has perfected the art of disinformation to influence public opinion and destabilize the West. These campaigns have multiplied since the invasion of Ukraine in 2022, combining cyberattacks, pro-Russian propaganda, and information manipulation. The goal is often to discredit information from Ukraine and its allies while justifying Russian military actions. One example of these large-scale campaigns is Operation Doppelgänger, which creates fake articles imitating credible media outlets and spreads them on social media. More recently, Operation Matryoshka has unfolded on X (formerly Twitter).

The Launch of the Matryoshka Disinformation Campaign

Launched on September 5, 2023, the Matryoshka campaign began with a fake Fox News report, accompanied by requests that several media outlets verify its authenticity. The operation was identified on X by the collective Antibot4Navalny (@antibot4navalny), which fights Russian disinformation online, and was named “Matryoshka” (матрёшка in Russian) after the Russian nesting dolls that hide smaller dolls under each layer. Russia's disinformation strategy works similarly, releasing successive layers of false or exaggerated information that make source verification increasingly difficult.

“Please Verify This Information”

The campaign’s primary objective is to overwhelm journalists and fact-checkers with false information, forcing them to spend time on verification. Pro-Russian operators target specific accounts and ask them to verify fake content, which they share directly or post in the comments under a post. Beyond disinformation, Moscow aims to spread confusion online by overwhelming media outlets and encouraging distrust toward institutions. France, for example, is a primary target because of its official support for Ukraine in the conflict with Russia.

Fake Content at the Heart of Russia’s Strategy

Between September 2023 and February 2024, VIGINUM, the French agency responsible for monitoring digital interference, identified over 90 actions linked to the Matryoshka operation. The campaign relies on three types of fake content, translated into multiple languages (French, English, Italian, German, Russian, and Ukrainian) to reach a wider audience:

- Videos imitating the visual style of Western media, using identical logos and fonts,

- Fake screenshots of press articles, Instagram stories, or YouTube videos,

- Falsified official documents.

Created with AI or editing software, this content is difficult for internet users to identify as fake.

Ukraine as Russia’s Target

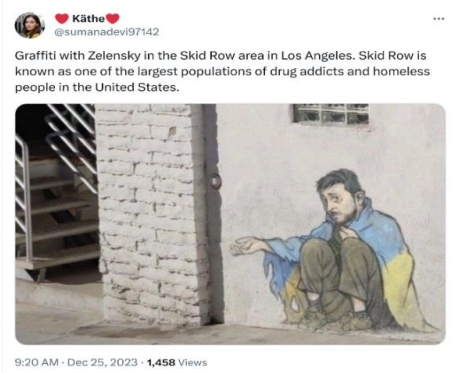

Ukraine is a central focus of these campaigns, which seek to weaken international support for the country and discredit its allies. The fake content often targets the Ukrainian government. For example, its president, Volodymyr Zelensky, may be portrayed as a beggar or even a war criminal. To make some visuals more credible, Russian operators sometimes imitate the visual style of well-known street artists, such as the French artist Lekto.

Some of the content targets Ukrainian refugees in order to stir up tension and hostility among Europeans. For instance, a fake report claimed that a Ukrainian refugee received €900,000 from the government, promoting the idea that refugees abuse public aid. These campaigns aim to divide European public opinion and generate distrust toward Ukrainian refugees and the solidarity measures supporting Ukraine.

Examples of Fake Content

Fake report showing Alexei Navalny’s wife, a figure of the opposition to the Russian regime, with other men. The aim is to discredit both her and the opposition.

(Screenshot from a Twitter publication of @antibot4navalny)

(Fake content created in the context of Matryoshka disinformation campaign)

Fake screenshot of a EuroNews report featuring a Ukrainian refugee abusing Italian government aid, to incite hostility towards Ukrainian refugees.

(Twitter archival by @antibot4navalny / https://archive.ph/XMWb6)

(Fake content created in the context of Matryoshka disinformation campaign)

Fake picture showing graffiti of Volodymyr Zelensky depicted as a beggar.

(Twitter archival by @antibot4navalny / https://archive.ph/VEFVh)

(Fake content created in the context of Matryoshka disinformation campaign)

Fake CIA message targeting the Paris 2024 Olympics.

(© « How Russia is trying to disrupt the 2024 Paris Olympic Games » by Clint Watts, published on June 2, 2024)

(Fake content created in the context of Matryoshka disinformation campaign)

Confirmed Russian Interference

VIGINUM’s investigation revealed that most of the content of the Matryoshka campaign originates from Russian Telegram channels notorious for disinformation operations. This, paired with attempts to discredit governments and pro-Russian messages, confirms it as a case of foreign digital interference.

European Response to Foreign Interference

On October 8, 2024, the EU Council adopted a new sanctions regime against actors involved in Russian destabilization activities that threaten the security and values of the EU and its member states. Individuals and entities engaged in such operations will face sanctions such as asset freezes, a ban on funding from European citizens and businesses, and entry and transit restrictions within the EU. These measures aim to reinforce the “Strategic Compass” adopted by the Council in 2022 to detect and counter foreign interference, an increasingly common threat. This will help the EU respond more effectively to threats like cyberattacks, disinformation campaigns, sabotage of vital infrastructure, election manipulation, and other destabilizing activities.

European Sanctions Since 2014

These sanctions complement those previously imposed by the EU on Russia and its allies after the illegal annexation of Crimea in 2014 and the invasion of Ukraine in 2022. The goal is to weaken key sectors of the Russian economy and reduce its ability to finance the war by isolating it economically. The latest sanctions package, the 14th, was adopted in June 2024. Sanctions can target individuals and entities in Russia or Belarus, restrict Russian oil and gas imports, impose financial penalties, and ban certain Russian media within the EU. Recent Council reports highlighted the effectiveness of these measures, showing a notable decline in the Russian economy.

Despite the EU’s commendable efforts to prevent Russia from bypassing these sanctions, it remains uncertain whether Moscow will succeed in circumventing them, especially with the support of its allies.

« Liberal democracies cannot survive without information, reliable information, and trust in democratic processes, such as elections. To defend democracy and protect against manipulation, we must consider it a security threat and respond with equal strength to those attacking us. »

(Disinformation and Foreign Interference: Josep Borrell, High Representative and Vice-President of the European Commission, EEAS Conference, Brussels, January 23, 2024)


ANALYSIS OF OPERATION MATRYOSHKA

How does the operation work?

According to the report published by VIGINUM, Operation Matryoshka operates mainly on X. It begins with a first group of accounts, known as “seeders”, which publish fake content at intervals of just a few minutes.

About 30 minutes later, a second group of accounts, the “quoters”, shares this fake content by posting it in the comments of targeted posts, often adding unique text or emojis to appear more authentic. These accounts keep sharing fake content in this way for several hours, averaging one post every 45 seconds. Later, the same accounts are reused in future disinformation waves, alternating between the roles of “seeder” and “quoter”. For example, the account @wosuhitsu1972 participated in at least five operations within two weeks before being suspended.
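The timing pattern described above (a delay after the first posts, then replies arriving at a very fast, steady pace) can be illustrated with a small sketch. This is not VIGINUM's method: the function names, the 15-minute minimum delay, and the 60-second threshold are assumptions chosen for illustration, and the timestamps are invented.

```python
from datetime import datetime, timedelta
from statistics import mean


def mean_gap_seconds(times):
    """Average gap, in seconds, between consecutive posts (None if fewer than two)."""
    ordered = sorted(times)
    if len(ordered) < 2:
        return None
    return mean((b - a).total_seconds() for a, b in zip(ordered, ordered[1:]))


def looks_like_quoter_wave(seed_time, reply_times,
                           min_delay=timedelta(minutes=15),  # assumed threshold
                           max_mean_gap=60.0):               # assumed threshold
    """Flag replies that start well after the seed post and arrive at a rapid pace."""
    gap = mean_gap_seconds(reply_times)
    if gap is None:
        return False
    first_reply = min(reply_times)
    return first_reply - seed_time >= min_delay and gap <= max_mean_gap


# Invented example data: one seed post, then replies every 45 seconds from +30 minutes.
seed = datetime(2024, 1, 1, 12, 0, 0)
wave = [seed + timedelta(minutes=30, seconds=45 * i) for i in range(10)]
# Invented counter-example: replies spaced ten minutes apart.
organic = [seed + timedelta(minutes=30 + 10 * i) for i in range(10)]

print(mean_gap_seconds(wave))
print(looks_like_quoter_wave(seed, wave))
print(looks_like_quoter_wave(seed, organic))
```

A cadence check like this is only one weak signal; busy legitimate accounts can also post quickly, so it would need to be combined with other evidence.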

Bots or real people behind the accounts?

VIGINUM’s analysis revealed that many accounts used in this campaign appear to be hacked or purchased from specialized companies, such as WebMasterMarket, which sells X accounts. Most of these accounts display AI-generated profile pictures, lack biographies or location information, and have few or no followers.
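As a rough illustration, the profile traits listed above can be turned into a simple checklist. The `Account` fields, the follower threshold, and the example handles below are hypothetical and not taken from the report; detecting AI-generated profile pictures is left out because it cannot be reduced to a one-line check.

```python
from dataclasses import dataclass


@dataclass
class Account:
    """Hypothetical minimal view of a public profile."""
    handle: str
    bio: str = ""
    location: str = ""
    followers: int = 0


def suspicion_signals(account, max_followers=10):
    """Return the list of weak signals matched by this profile.

    max_followers is an assumed cut-off for "few or no followers".
    """
    signals = []
    if not account.bio.strip():
        signals.append("no biography")
    if not account.location.strip():
        signals.append("no location")
    if account.followers <= max_followers:
        signals.append("few or no followers")
    return signals


# Invented example profiles.
empty_profile = Account(handle="@example_empty")
filled_profile = Account(handle="@example_filled", bio="Reporter",
                         location="Paris", followers=5000)

print(suspicion_signals(empty_profile))
print(suspicion_signals(filled_profile))
```

Each of these traits is common among ordinary new accounts, so a match suggests a profile worth a closer look rather than proof of inauthentic behavior.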

While the posting of fake content seems automated, human errors, such as typos or comments posted within a thread rather than as replies to the target’s post, suggest that some accounts may be managed by real people rather than bots. Targeting mistakes reinforce this idea: operators sometimes target the same accounts many times or misspell the names of official accounts.

Targets of the campaign

VIGINUM reports that Western countries are the primary targets, but other regions, including Ukraine, the Balkans, the Middle East, and parts of Asia, Africa, and Latin America, are also affected. Russian opposition media and figures in Belarus and Iran face similar targeting. Most targets are well-known media outlets (AFP, BBC, USA Today), fact-checking and anti-disinformation organizations (EU Disinfo Lab, France 24 – Info ou Intox, FactCheck Bulgaria), influential public figures, and investigative journalists like Christo Grozev. Government agencies, NGOs, universities, investment funds, and political parties can also be targeted.

Within a given country, targets appear to be attacked in the same order, suggesting that operators work from a predefined list. Since the campaign began in September 2023, around 500 X accounts have been targeted (including about 40 French accounts). The target list is regularly updated, but some targets, such as national and international press agencies, are hit systematically.
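The observation that targets are attacked in the same order can be checked with a simple comparison between two waves. The sketch below is an illustration under assumptions, not the method used in the report: the function names and the handles are invented, and repeated mentions of the same account are reduced to their first occurrence.

```python
def first_occurrences(targets):
    """Drop repeated handles, keeping the position of each first mention."""
    return list(dict.fromkeys(targets))


def same_relative_order(wave_a, wave_b):
    """True if the targets shared by both waves appear in the same order.

    Returns False when fewer than two targets are shared, since order
    cannot be compared in that case.
    """
    a = first_occurrences(wave_a)
    b = first_occurrences(wave_b)
    shared = set(a) & set(b)
    if len(shared) < 2:
        return False
    return [t for t in a if t in shared] == [t for t in b if t in shared]


# Invented handles for illustration only.
wave_1 = ["@agency_a", "@factcheck_b", "@outlet_c", "@outlet_d"]
wave_2 = ["@agency_a", "@outlet_c", "@outlet_c", "@new_target", "@outlet_d"]
wave_3 = ["@outlet_d", "@agency_a", "@outlet_c"]

print(same_relative_order(wave_1, wave_2))
print(same_relative_order(wave_1, wave_3))
```

Here the second wave adds one target and drops another but keeps the shared accounts in the same sequence, which is the kind of pattern that would be consistent with an updated predefined list.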
